2021-01-17, 00:21

Um einige Klassifikations-Algorithmen in Python ausprobieren zu können habe ich heute die Swiss Banknote Data von Flury und Riedwyl benötigt. Die Daten sind im Netz z.B. unter http://www.statistics4u.com/fundstat_eng/data_fluriedw.html verfügbar, ich wollte sie aber nicht manuell einladen müssen.

Mit dem folgenden Code, adaptiert von https://hackersandslackers.com/scraping-urls-with-beautifulsoup/, kann man die Daten lokal abspeichern und dann in einen pandas Dataframe einladen.

import pandas as pd

import requests

from bs4 import BeautifulSoup

headers = {

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Methods': 'GET',

'Access-Control-Allow-Headers': 'Content-Type',

'Access-Control-Max-Age': '3600',

'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0'

}

url = "http://www.statistics4u.com/fundstat_eng/data_fluriedw.html"

req = requests.get(url, headers)

soup = BeautifulSoup(req.content, 'html.parser')

a=soup.find('pre').contents[0]

with open('banknote.csv','wt') as data:

data.write(a)

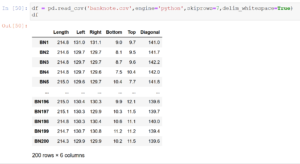

df = pd.read_csv('banknote.csv',engine='python',skiprows=5,delim_whitespace=True)

print(df) |

import pandas as pd

import requests

from bs4 import BeautifulSoup

headers = {

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Methods': 'GET',

'Access-Control-Allow-Headers': 'Content-Type',

'Access-Control-Max-Age': '3600',

'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0'

}

url = "http://www.statistics4u.com/fundstat_eng/data_fluriedw.html"

req = requests.get(url, headers)

soup = BeautifulSoup(req.content, 'html.parser')

a=soup.find('pre').contents[0]

with open('banknote.csv','wt') as data:

data.write(a)

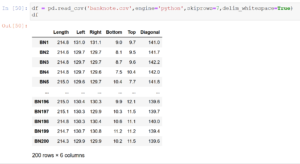

df = pd.read_csv('banknote.csv',engine='python',skiprows=5,delim_whitespace=True)

print(df)

Uwe Ziegenhagen likes LaTeX and Python, sometimes even combined.

Do you like my content and would like to thank me for it? Consider making a small donation to my local fablab, the Dingfabrik Köln. Details on how to donate can be found here Spenden für die Dingfabrik.

More Posts - Website

2019-11-24, 21:20

Mit dem folgenden Python-Skript lassen sich auf einfache Weise alle PDFs von einer Webseite herunterladen.

from bs4 import BeautifulSoup

import urllib.request

import requests

url = 'http://irgendeineurl.de'

r = requests.get(url)

data = r.text

soup = BeautifulSoup(data)

for link in soup.find_all('a'):

if link.get('href').endswith('.pdf'):

urllib.request.urlretrieve(url + link.get('href'), link.get('href'))

print(url + link.get('href')) |

from bs4 import BeautifulSoup

import urllib.request

import requests

url = 'http://irgendeineurl.de'

r = requests.get(url)

data = r.text

soup = BeautifulSoup(data)

for link in soup.find_all('a'):

if link.get('href').endswith('.pdf'):

urllib.request.urlretrieve(url + link.get('href'), link.get('href'))

print(url + link.get('href'))

Uwe Ziegenhagen likes LaTeX and Python, sometimes even combined.

Do you like my content and would like to thank me for it? Consider making a small donation to my local fablab, the Dingfabrik Köln. Details on how to donate can be found here Spenden für die Dingfabrik.

More Posts - Website